In this article, we’ll delve into Kubernetes’ essential components: Ingress and Ingress Controllers.

Together, these components manage external access to the cluster, host- and path-based routing, and SSL/TLS certificates. Understanding them is crucial for orchestrating seamless traffic routing and service exposure.

For the networking basics, see my previous article on Kubernetes networking:

networking-in-Kubernetes-simplified

What is ingress in Kubernetes?

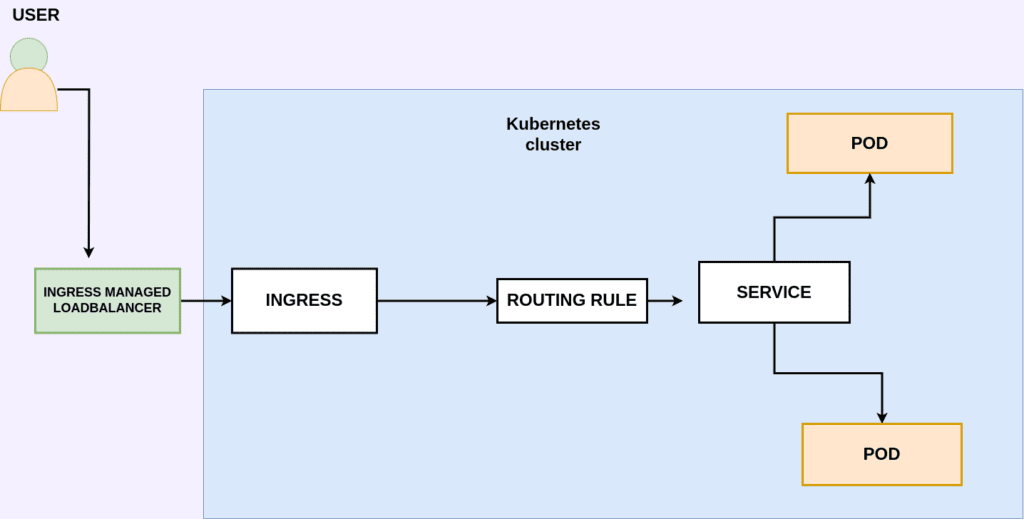

Ingress is traffic entering a system or network. In our case, it is the traffic entering the Kubernetes cluster. When we need to expose an application to the external world, we typically use a Service of type LoadBalancer or NodePort.

NodePort is a configuration setting declared in a service’s YAML. By setting the service spec’s type to NodePort, Kubernetes allocates a specific port on each Node to that service, forwarding any requests to your cluster on that port to the service.
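As a minimal sketch of what this looks like, the manifest below declares a NodePort Service. The Service name, the `app: my-app` label, and the port numbers are assumptions chosen for illustration; the explicit `nodePort` is optional and, if set, must fall in the cluster's node port range (30000-32767 by default).

```yaml
# Hypothetical NodePort Service; names, labels, and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: app-service
spec:
  type: NodePort
  selector:
    app: my-app          # assumed label on the application pods
  ports:
    - port: 8000         # port the Service exposes inside the cluster
      targetPort: 8000   # port the application container listens on
      nodePort: 30080    # port opened on every node (optional; auto-assigned if omitted)
```

With this in place, the application would be reachable at any node's IP address on port 30080.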

You can configure a Service of type LoadBalancer in the same way as NodePort, by setting the type property in the Service's YAML. However, the cluster needs external load balancer functionality, which is typically provided by a cloud provider.
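A LoadBalancer Service might look like the following sketch. As before, the Service name, the selector label, and the ports are assumptions for illustration; the external IP address is assigned by whatever load balancer integration the cluster has.

```yaml
# Hypothetical LoadBalancer Service; names, labels, and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: app-service
spec:
  type: LoadBalancer
  selector:
    app: my-app          # assumed label on the application pods
  ports:
    - port: 80           # port exposed on the load balancer
      targetPort: 8000   # port the application container listens on
```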

While both methods work, they have their disadvantages. If you're using a NodePort, a node's IP address may change when nodes are replaced or recreated within the cluster. Each time the IP changes, you need to update it to access your application. If you're using a domain name, you may need additional configuration, such as dynamic DNS, to keep the IP address up to date.

With the LoadBalancer type, the application is accessible via the load balancer's IP address. This works well, but if you have multiple applications, each Service needs its own load balancer, because the same IP address cannot be used for two Services simultaneously. Provisioning a new load balancer for each application is expensive.

Ingress, on the other hand, is a resource that is completely independent of your Services. You declare, create, and destroy it separately from your Services.

An Ingress is used when we have multiple Services in our cluster and need to route traffic based on the URL path using a single load balancer IP address.

For example, requests to domain.com/app must be routed to one Service, and requests to domain.com/login must be routed to another.

This routing is done by the ingress controller. Ingress is not another type of Service; it is an entry point that sits in front of multiple Services in the cluster. It can be defined as a collection of routing rules that govern how external users access Services running inside a Kubernetes cluster.
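To make the domain.com/app and domain.com/login example concrete, here is a sketch of an Ingress with two path rules under a single host. The Service names `app-service` and `login-service` and the port numbers are hypothetical.

```yaml
# Hypothetical Ingress routing two paths on one host to two Services.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: path-routing-example
spec:
  rules:
    - host: domain.com
      http:
        paths:
          - path: /app
            pathType: Prefix
            backend:
              service:
                name: app-service      # assumed Service for the application
                port:
                  number: 8000
          - path: /login
            pathType: Prefix
            backend:
              service:
                name: login-service    # assumed Service for the login page
                port:
                  number: 8000
```

Both paths share one entry point, so only one load balancer IP address is needed.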

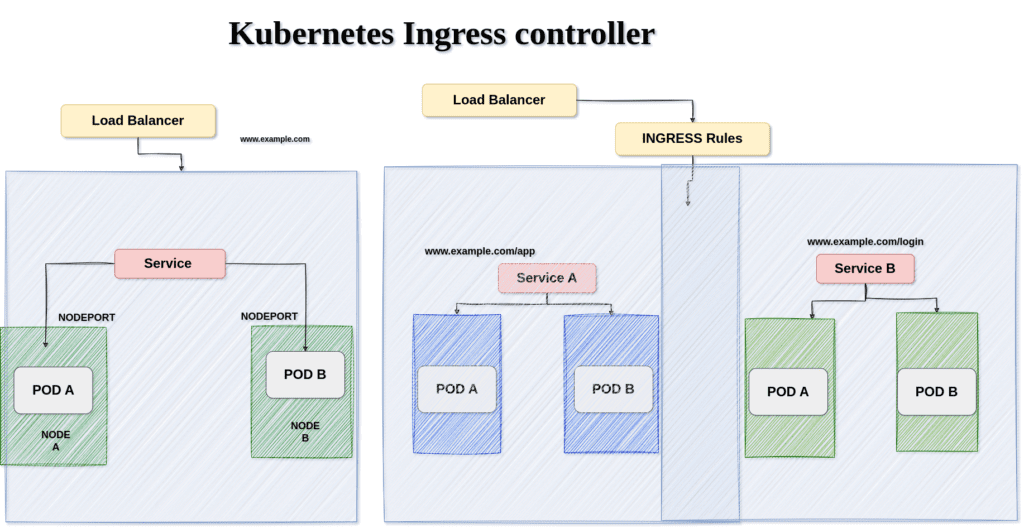

The above image is just a high-level overview of how the traffic flows to the pods with and without the ingress controller.

The image on the left side indicates the flow of traffic without the ingress controller.

An external user first hits the domain name example.com, which is mapped to the load balancer's IP address. The request therefore goes to the load balancer, then to the Service connected to that load balancer, and finally to the application pods.

As mentioned earlier, if I need to access another application in the same cluster, or another path such as example.com/login, I need to deploy another load balancer using the same architecture.

As shown in the image on the right side, with an ingress controller and a set of rules I can route traffic from the external user to the relevant application pods using only one load balancer.

What is a Kubernetes ingress Resource?

An Ingress is a Kubernetes resource, like a Pod or a Service, that the user creates for a specific use case. Using this resource, you can map external traffic to internal Kubernetes endpoints.

An Ingress needs apiVersion, kind, metadata and spec fields.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: example-ingress-resource
    spec:
      rules:
        - host: example.com
          http:
            paths:
              - path: /app
                pathType: Prefix
                backend:
                  service:
                    name: app-service
                    port:
                      number: 8000

This sample Ingress resource routes all traffic for URLs under example.com/app to port 8000 of the app-service backend.

You can add more paths for path-based routing, add a TLS configuration, and so on.
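As a sketch of the TLS configuration, the manifest below adds a `tls` section to the sample Ingress. The Secret name `example-com-tls` is an assumption; it would need to exist in the same namespace as a Secret of type `kubernetes.io/tls` holding the certificate (`tls.crt`) and private key (`tls.key`).

```yaml
# Hypothetical Ingress with TLS; the Secret name is illustrative.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress-tls
spec:
  tls:
    - hosts:
        - example.com
      secretName: example-com-tls   # assumed Secret of type kubernetes.io/tls
  rules:
    - host: example.com
      http:
        paths:
          - path: /app
            pathType: Prefix
            backend:
              service:
                name: app-service
                port:
                  number: 8000
```

The ingress controller would then terminate HTTPS for example.com using that certificate and forward the traffic to the backend Service.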

The name of an Ingress object must be a valid DNS subdomain name. For general information about working with config files, see deploying applications, configuring containers, and managing resources. Ingress frequently uses annotations to configure some options depending on the Ingress controller, an example of which is the rewrite-target annotation. Different Ingress controllers support different annotations.
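For illustration, the sketch below sets the rewrite-target annotation in the form used by the ingress-nginx controller (`nginx.ingress.kubernetes.io/rewrite-target`). Other controllers use different annotation names, and the exact rewrite behavior depends on the controller and its version, so treat this as an assumption to verify against your controller's documentation.

```yaml
# Hypothetical Ingress using the ingress-nginx rewrite-target annotation.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress-rewrite
  annotations:
    # Rewrites the matched request path to "/" before forwarding (ingress-nginx specific).
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /app
            pathType: Prefix
            backend:
              service:
                name: app-service
                port:
                  number: 8000
```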

You must have an Ingress controller to satisfy an Ingress. Only creating an Ingress resource has no effect.

You may need to deploy an Ingress controller such as ingress-nginx. You can choose from several Ingress controllers.

Ideally, all Ingress controllers should fit the reference specification. In reality, the various Ingress controllers operate slightly differently.
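Because several controllers can exist, an Ingress can name the one that should handle it through the `spec.ingressClassName` field. In the sketch below, the class name `nginx` is an assumption; the actual name depends on the IngressClass objects present in your cluster.

```yaml
# Hypothetical Ingress bound to a specific controller via ingressClassName.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress-with-class
spec:
  ingressClassName: nginx   # assumed IngressClass name; check the classes in your cluster
  rules:
    - host: example.com
      http:
        paths:
          - path: /app
            pathType: Prefix
            backend:
              service:
                name: app-service
                port:
                  number: 8000
```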

How does a Kubernetes ingress controller work?

An ingress controller is not part of a default Kubernetes installation, so we need to install it in our cluster ourselves.

Several ingress controllers are available for Kubernetes, such as NGINX and HAProxy.

ingress-controllers

One of the most commonly used ingress controllers is the NGINX ingress controller.

To install the nginx-ingress controller, you can follow the documentation below.

installing-nic/installation-with-manifests.

The nginx-ingress controller is deployed as a Deployment in the Kubernetes cluster. The Deployment creates a pod that runs the nginx-ingress controller and listens on ports 80 and 443, just like an ordinary web server.
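To receive external traffic, the controller pods are themselves exposed through a Service, which is where the single load balancer comes from. The manifest below is only a sketch: the `nginx-ingress` name, namespace, and `app: nginx-ingress` label are assumptions, and the manifests shipped with your controller's installation guide should take precedence.

```yaml
# Hypothetical Service exposing the ingress controller pods; names and labels are assumed.
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
  namespace: nginx-ingress
spec:
  type: LoadBalancer
  selector:
    app: nginx-ingress     # assumed label on the controller pods
  ports:
    - name: http
      port: 80
      targetPort: 80
    - name: https
      port: 443
      targetPort: 443
```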

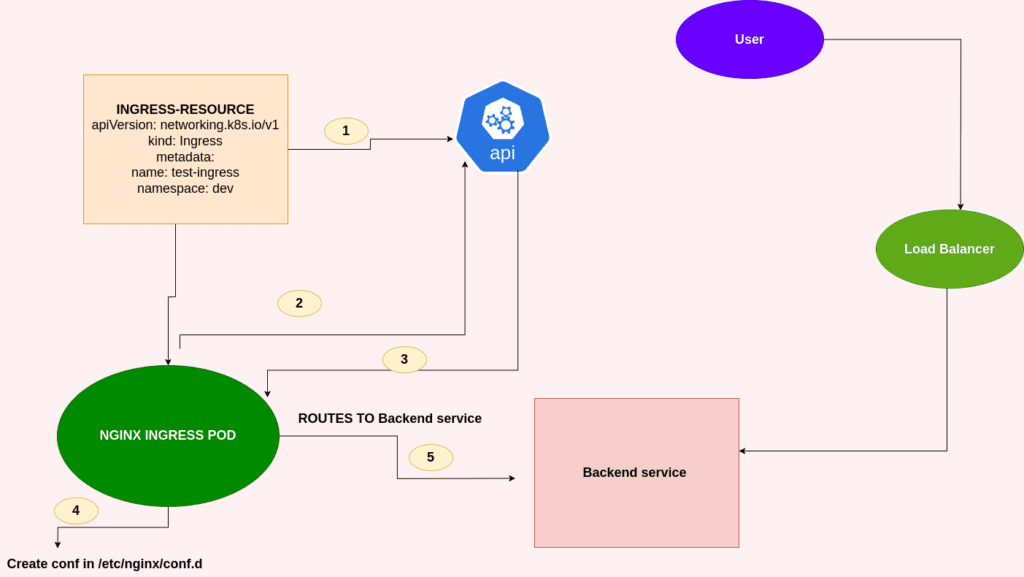

Ingress controllers have additional intelligence: they talk to the Kubernetes API. Whenever a new Ingress resource is created, the controller detects it and creates the configuration and routes needed to forward traffic to the respective backend Services.

If you have worked with the NGINX web server, you might be familiar with its configuration.

In NGINX web server administration, the primary configuration file, nginx.conf houses global settings. When configuring new virtual hosts, individual configuration files are typically created within /etc/nginx/conf.d/, each representing a distinct virtual host configuration. Following modifications, the NGINX web server is restarted to apply the changes across the server.

Similarly, whenever we create an Ingress resource, the ingress controller creates the necessary conf files and routes the traffic automatically.

As shown in the above image, the steps are:

1: The user creates an Ingress resource, with its routes and mappings, by talking to the Kubernetes API.

2: The ingress controller monitors the Kubernetes API for newly created resources and thus identifies the new Ingress resource.

3: The ingress controller fetches the information it needs to create the matching configuration file in the nginx pod.

4: The ingress controller then writes the NGINX configuration, with the routes and mappings, in the nginx-ingress controller pods.

Then, whenever a user sends a request for that domain, say example.com/app, the ingress controller routes the request to the respective backend Service.

Summary:

Using an Ingress together with an ingress controller, you can route external traffic to your internal application pods through a single entry point, without provisioning a separate load balancer for each application. You can also configure SSL/TLS settings in your Ingress resources to serve your domain with an SSL certificate.